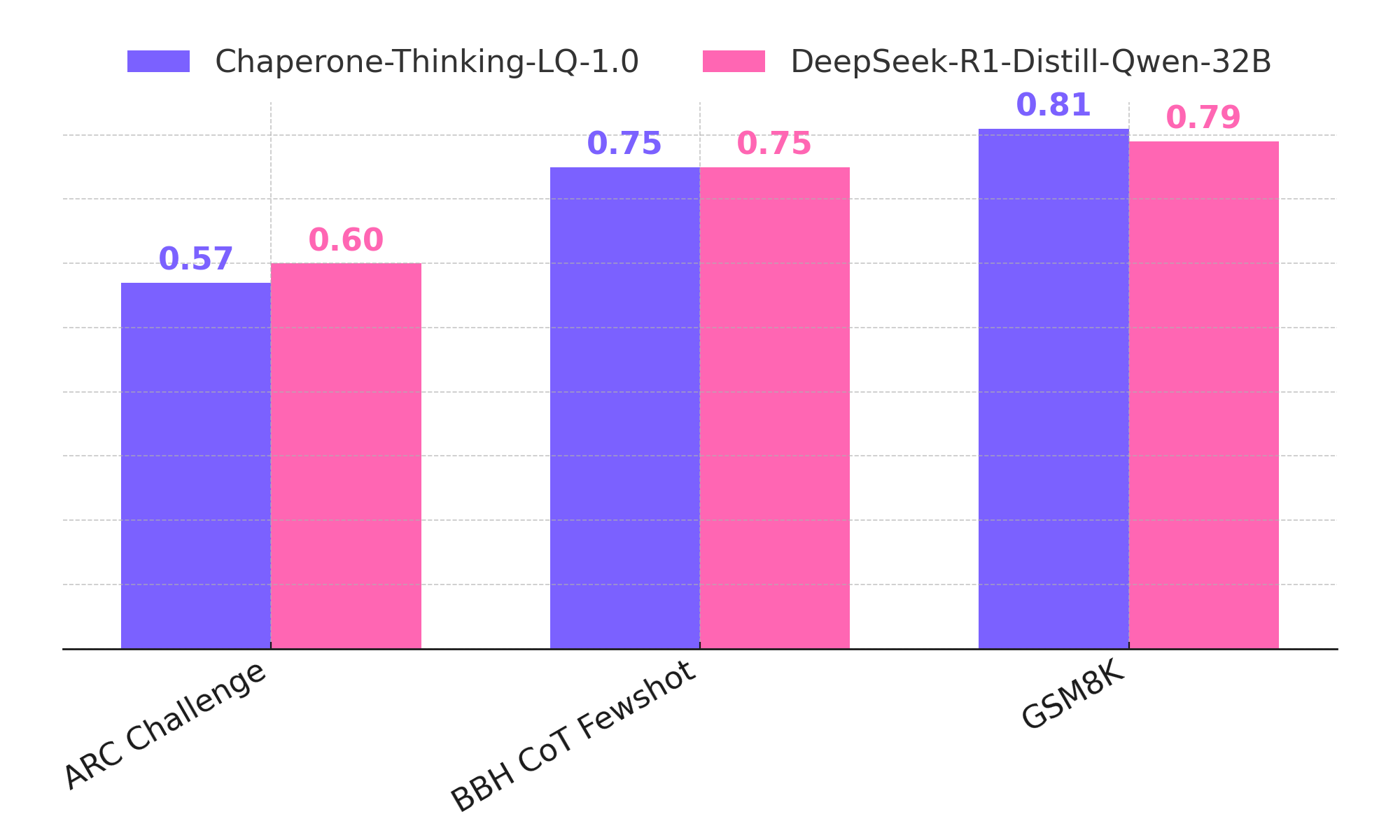

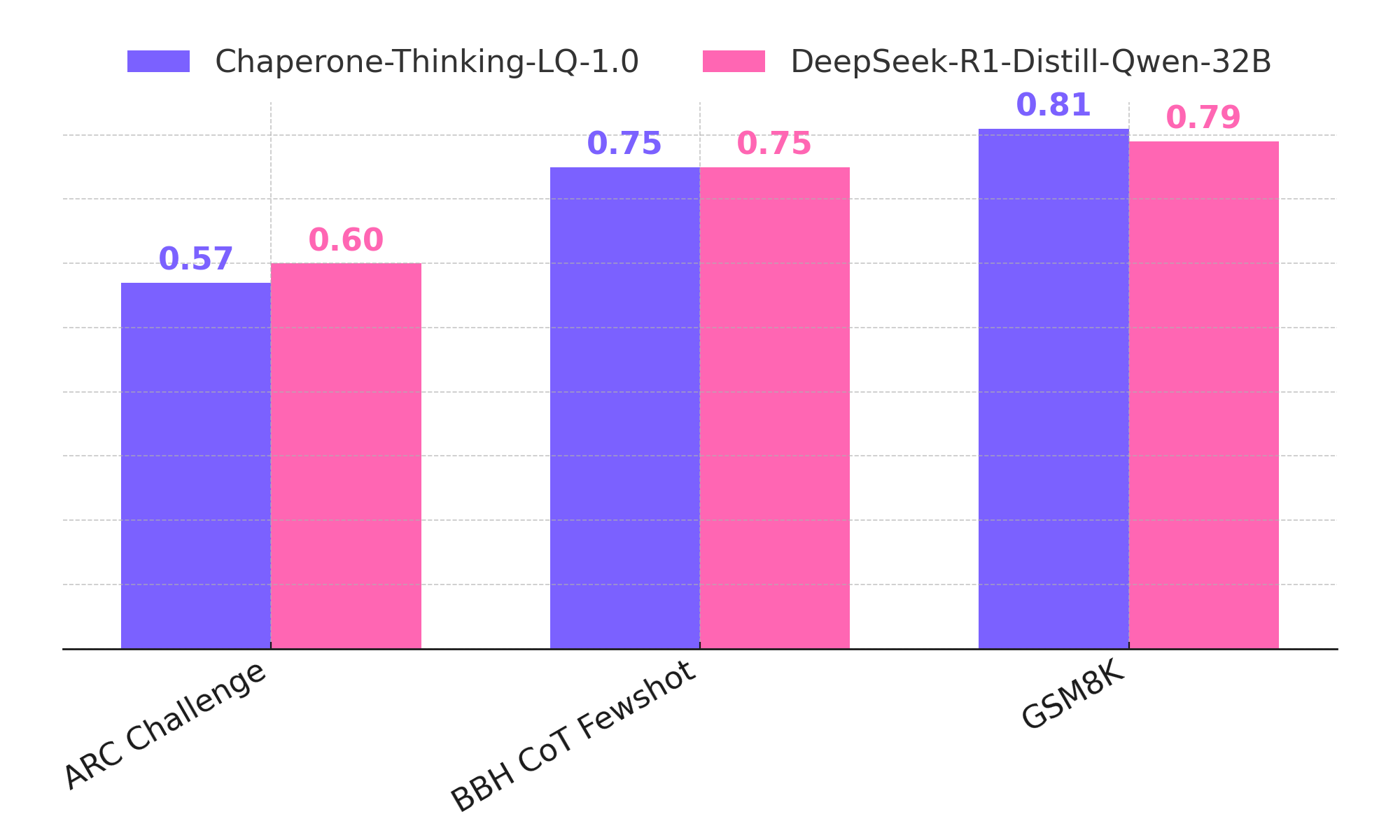

Chaperone-Thinking-LQ-1.0 delivers reasoning performance that rivals leading models while running about 1.6× faster than DeepSeek-R1-Distill-Qwen-32B in our tests.

Chaperone-Thinking-LQ-1.0 is our new LLM engineered for deep reasoning, scientific precision, and real-world deployability. Despite its lightweight design, it rivals, and on some benchmarks matches, the performance of today’s leading reasoning models from OpenAI and DeepSeek. Tested on rigorous datasets such as AIME 2024, MATH-500, GPQA Diamond, and MMLU, it delivers state-of-the-art results while remaining efficient and cost-effective. It is well suited to research workflows, technical assistants, and high-stakes enterprise applications.

In our side-by-side tests, Chaperone-Thinking-LQ-1.0 consistently responded faster than DeepSeek-R1-Distill-Qwen-32B, delivering answers in about 11–12 seconds versus roughly 20 seconds for the alternative, or around 1.6× faster overall. The other model tended to produce slightly longer replies, but Chaperone-Thinking-LQ-1.0 still returned results notably sooner, offering a snappier, more efficient experience for everyday use.

| Benchmark | Metric / Task | Chaperone-Thinking-LQ-1.0 | DeepSeek-R1-Distill-Qwen-32B |
|---|---|---|---|
| Speed & Latency | Throughput (tok/s) | 36.86 | 22.84 |
| | Latency p50 (s) | 11.49 | 20.10 |
| | Latency p95 (s) | 13.06 | 20.11 |
| | Tokens / Request (avg) | 435.4 | 459.0 |

Averages over 10 trials, with concurrency=1, max_tokens=512, and temperature=0.
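As a rough illustration of how summary figures like those reported above can be derived from raw per-request measurements, the sketch below computes throughput, latency percentiles, and average tokens per request from a list of trials. The `Trial` record, the `summarize` helper, the nearest-rank percentile method, the definition of throughput as total tokens over total time, and the sample values are all assumptions made for illustration. The source does not describe the actual benchmarking harness or its aggregation method.

```python
import math
from dataclasses import dataclass


@dataclass
class Trial:
    """One sequential request (concurrency=1). Hypothetical record type."""
    latency_s: float     # wall-clock seconds from request to full response
    output_tokens: int   # tokens generated for that request


def percentile(values, q):
    """Nearest-rank percentile (one common convention; an assumption here)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(trials):
    """Aggregate per-request measurements into table-style metrics."""
    latencies = [t.latency_s for t in trials]
    tokens = [t.output_tokens for t in trials]
    return {
        # Assumed definition: total tokens divided by total wall-clock time.
        "throughput_tok_s": sum(tokens) / sum(latencies),
        "latency_p50_s": percentile(latencies, 50),
        "latency_p95_s": percentile(latencies, 95),
        "tokens_per_request": sum(tokens) / len(tokens),
    }


# Made-up sample data, NOT the measurements behind the table.
sample = [Trial(10.0, 400), Trial(12.0, 440), Trial(11.0, 420), Trial(14.0, 500)]
stats = summarize(sample)
print(stats)

# Ratios computed from the published table values.
throughput_ratio = 36.86 / 22.84   # about 1.61
p50_latency_ratio = 20.10 / 11.49  # about 1.75
print(round(throughput_ratio, 2), round(p50_latency_ratio, 2))
```

Under these assumptions, the published throughput ratio works out to about 1.61, which lines up with the 1.6× figure quoted in the text, while the median latency ratio is somewhat higher at about 1.75.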

Chaperone-Thinking-LQ-1.0 excels in generative tasks and accuracy-based evaluations, particularly on the GPQA benchmark.

It demonstrates strong performance on complex reasoning tasks that require deep understanding and logical processing.

It is optimized for quick processing with minimal overhead, delivering reliable results at high speed across tasks.